Single-domain or Multi-SAN certificates?

December 9, 2023

TLS encrypts connections between two communicating devices, and by far the most common way to authenticate them is with X.509 certificates. They have been around for decades and have supported the move to an encrypted web.

The job of certificates is to bind two things together: a cryptographic key and an identity. They serve as a document that tells us that, for example, the public key

04:60:4a:74:2e:ea:2b:bf:16:3f:14:2f:c5:26:df:

fe:65:c7:bd:7f:81:b0:48:a7:dd:82:3b:36:ee:28:

0d:2b:2c:06:18:68:aa:9d:c3:b8:e7:73:cb:21:36:

11:b3:ec:f0:ff:ab:77:51:0a:fa:4e:07:27:16:1f:

23:3f:32:71:2e

is tied to the domain daknob.net. It could have been an IP address, an e-mail address, or a few other things, but most certificates right now are used with domain names.

When connecting to a server for HTTPS, this certificate will be served and, if trusted, will allow the client to set up encryption using the key it includes.

A server however can be responsible for multiple domain names, and certificates have evolved to handle that. This is done by making the resources this key is associated with an array. A single public key can be connected to 50 domains and 12 IP addresses if we like. This array is called the Subject Alternative Name, or SAN.

Now if we serve 20 domains on a single machine, we can either have one certificate with all of them in it, or 20 certificates with one domain each, or anything in between.
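To make the options concrete, here is a minimal sketch that models a certificate as one key bound to a list of names and splits 20 domains in different ways. The `Certificate` class, the `issue_in_groups` helper, the `key-N` labels, and the `siteN.example` names are all made up for illustration; no real keys or CAs are involved.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Certificate:
    """Toy model: one public key bound to a list of identities (the SAN)."""
    public_key: str
    san: tuple[str, ...]


def issue_in_groups(domains: list[str], per_cert: int) -> list[Certificate]:
    """Split `domains` into certificates holding at most `per_cert` names each."""
    if per_cert < 1:
        raise ValueError("per_cert must be at least 1")
    certs = []
    for start in range(0, len(domains), per_cert):
        names = tuple(domains[start:start + per_cert])
        # Hypothetical key label; a real deployment would generate a key pair.
        certs.append(Certificate(public_key=f"key-{len(certs)}", san=names))
    return certs


domains = [f"site{i}.example" for i in range(20)]
print(len(issue_in_groups(domains, 20)))  # one multi-SAN certificate
print(len(issue_in_groups(domains, 1)))   # twenty single-domain certificates
print(len(issue_in_groups(domains, 5)))   # something in between
```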

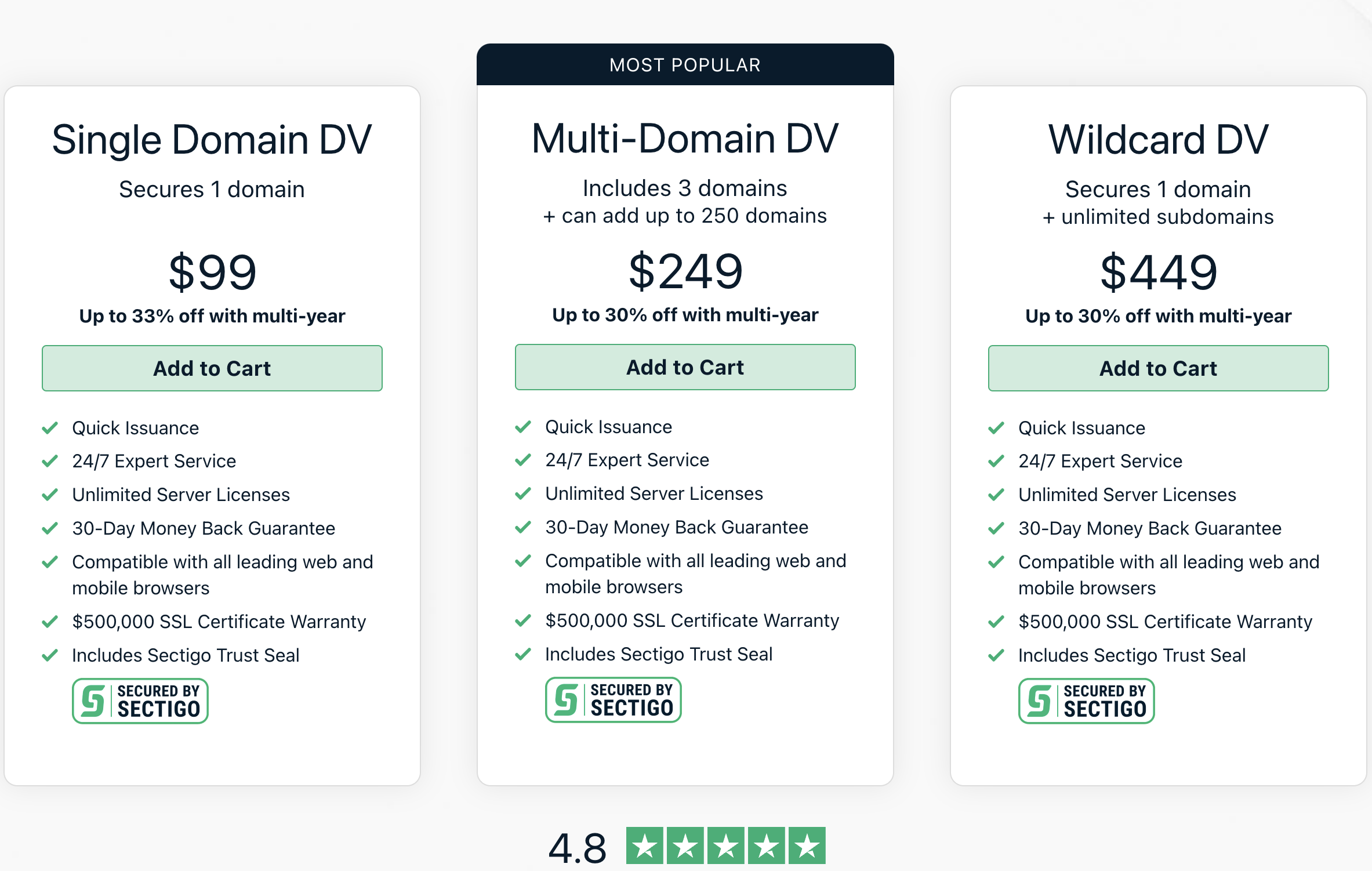

Before 2014, certificates used to cost real money. Companies charged for issuing them, with prices ranging from $10 to thousands of dollars a year. One of the cheapest providers out there is Sectigo / Comodo, whose pricing today looks like this:

As you can see, getting 20 certificates would cost us almost $2,000 per year! The second option, which allows us to include up to 250 domains, is cheaper, as we’d need only $1,082 / year, almost half the price! Why not just $249? Because although the price above is $249, they have an additional fee of $49 / year for each additional domain. Finally, we can save 75% if we go for the “Unlimited domains” option. But why is that so cheap? Because in reality it’s unlimited domains that conform to a pattern. Basically it covers all subdomains of one name, whether you want it or not.

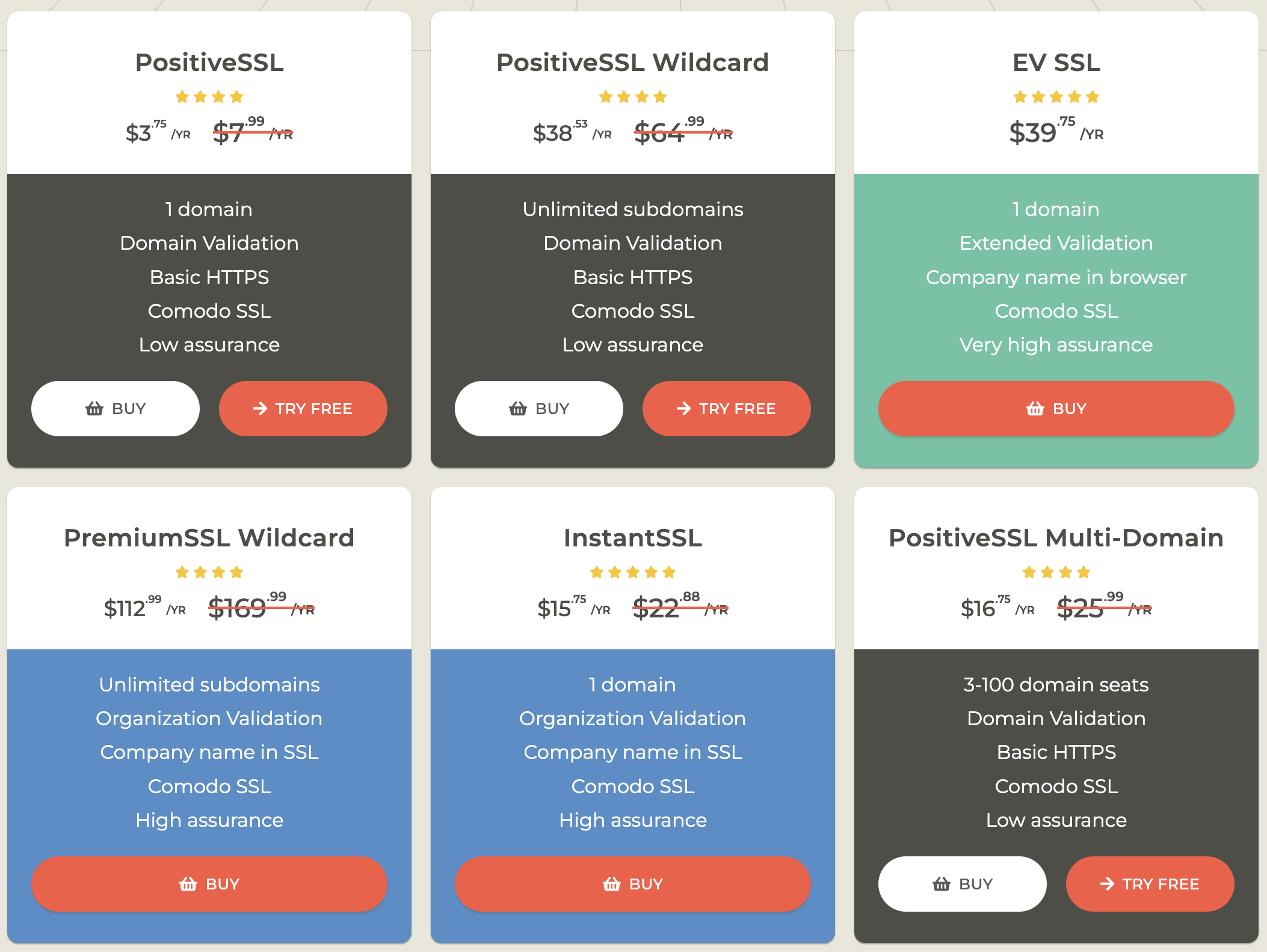

To be fair, almost nobody (I hope) buys from them directly, as going to resellers can have significant cost savings. My go-to choice is ssls.com which has a much better policy:

If you’re wondering what these “Organization Validation” or “Extended Validation” choices are, you can ignore them: they’re ancient relics of the past that we have to maintain for “compatibility” reasons ;)

All of the choices above produce the exact same security level from the exact same organization (Sectigo), and the only real difference is the number of domains included.

Thankfully, in 2014, Let’s Encrypt and Cloudflare changed the entire industry for the better, by making certificates free! That means we can focus on pure Engineering, without worrying about another variable too :)

Let’s continue now by looking at the various options:

A word on Wildcard

First of all, let’s start with the “Wildcard” certificates. They include one domain name, and all of its direct subdomains. For example, they can secure daknob.net, and *.daknob.net, which means all the direct subdomains such as www.daknob.net, or blog.daknob.net, but not api.store.daknob.net.
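The matching rule described above can be sketched in a few lines. This is a simplified model, assuming the wildcard stands for exactly one leftmost DNS label; real validators handle more cases (internationalized names, public suffixes), and the function names here are my own.

```python
def name_covers(pattern: str, hostname: str) -> bool:
    """Return True if a single certificate name covers `hostname`.

    Simplified rule: a leading "*." stands for exactly one DNS label.
    """
    pattern = pattern.lower().rstrip(".")
    hostname = hostname.lower().rstrip(".")
    if not pattern.startswith("*."):
        return pattern == hostname
    p_labels = pattern.split(".")
    h_labels = hostname.split(".")
    # The wildcard replaces one label, so the label counts must match.
    if len(p_labels) != len(h_labels):
        return False
    return h_labels[0] != "" and p_labels[1:] == h_labels[1:]


def san_covers(san: list[str], hostname: str) -> bool:
    """A certificate covers a hostname if any of its SAN entries does."""
    return any(name_covers(name, hostname) for name in san)


wildcard_san = ["daknob.net", "*.daknob.net"]
for host in ["daknob.net", "www.daknob.net", "blog.daknob.net", "api.store.daknob.net"]:
    print(host, san_covers(wildcard_san, host))
```

Note that the bare daknob.net is only covered because it is listed as its own SAN entry; the wildcard entry alone does not match it.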

My opinion is that they have very few uses, as they come with problems:

- They don’t cover subdomains of subdomains

- If you use multiple servers, you copy the private keys all over your infrastructure, and compromising one of them is enough to break the security properties of all your communications

- Issuing them is trickier to automate because of stricter requirements

- The most common validation method is adding a DNS record, which means that you now need to distribute a way to change your entire zone to all of your machines

With ACME now issuing most of the world’s certificates through automation, and with far more security, I would argue that wildcard certificates should be avoided if possible.

Single-SAN or Multi-SAN?

Now let’s get to the original question. Certificates with one domain, or plenty?

The current best practice is to have one domain per certificate. This offers the most flexibility, as adding or removing domains can cause problems. Imagine covering 20 domain names, and one of them expires. Now the automation won’t be able to include it, it will stop renewing the existing certificate, and if there’s no monitoring, the certificate covering the other 19 names will expire too.
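Here is a small sketch of that failure mode, under the assumption that the CA only issues when every requested name passes validation. The helper names and the `siteN.example` domains are hypothetical.

```python
def can_renew(san, validated):
    """Assumption: the CA issues only if every requested name validates."""
    return all(name in validated for name in san)


def names_left_uncovered(certs, validated):
    """Names whose certificate cannot be renewed and will eventually expire."""
    uncovered = set()
    for san in certs:
        if not can_renew(san, validated):
            uncovered.update(san)
    return uncovered


domains = [f"site{i}.example" for i in range(20)]
validated = set(domains) - {"site7.example"}  # one registration lapsed

multi_san = [domains]                 # one certificate, 20 names
single_san = [[d] for d in domains]   # 20 certificates, one name each

print(len(names_left_uncovered(multi_san, validated)))   # every name is affected
print(len(names_left_uncovered(single_san, validated)))  # only the lapsed name
```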

A worst case scenario would be someone buying the expired domain, not recognizing or not wanting you to have an associated public key they can’t control, and asking the issuer to revoke the certificate. Now many clients going to your website will see scary errors from their browsers informing them something nefarious is going on.

There’s a great document from some industry experts on why one domain per certificate is actually better, so I will not go into that.

In this document, you can find a list of reasons why one might consider adding many domains:

- Storage space is extremely limited (unlikely, because storage is cheap).

- The web host / service provider charges for certificates and you need to reduce that cost.

- You need to manage fewer certificates due to CA-enforced rate limits.

- Your server software only allows one certificate per virtual host, and the virtual host serves multiple domains.

With this post, I’d like to add another potential reason why, in very few environments, and after careful consideration and a good understanding of the trade-offs, the multi-SAN option may make more sense, or could even be necessary.

Modern Apps

Modern (web) apps are rarely self-contained, especially in very large companies with many teams working on a single product. These apps will have the browser make tens or hundreds of requests to various endpoints to function. They’ll fetch code from one place, images from another, fonts from elsewhere, etc.

Here are some examples:

+-------------+----------------+
| Website     | Unique Domains |
+-------------+----------------+
| Gmail       | 17+            |
+-------------+----------------+
| YouTube     | 16+            |
+-------------+----------------+
| facebook    | 8+             |
+-------------+----------------+
| TikTok      | 22+            |
+-------------+----------------+
| Google Maps | 18+            |
+-------------+----------------+

All of these websites made hundreds of HTTP requests to plenty of domain names. And each one of these domains requires a new connection from the browser. Let’s go over a few things now that matter for application reliability, performance, security, etc.

Bandwidth

One of the main arguments for single-SAN certificates is the bandwidth saved. A certificate that only needs one domain will be smaller than one that has 20. If we take an average of 20 bytes per domain, we’d expect a 20-domain multi-SAN certificate to be 380 bytes larger (19 extra names at 20 bytes each).

While that’s true, here are some caveats:

Some of the smaller ECDSA certificates are around 1 KB on the wire. These are ECDSA certificates issued from ECDSA CAs. Adding some low tens of domains can get them to 1.5 KB, but that’s nothing compared to having to fetch 15 of these certificates, at the cost of 15 KB. For RSA, the difference is even larger, as each single-domain certificate is 1.5 KB.

And it gets worse…

If you are using multiple certificates, you don’t just have to download the leaf certificates, you have to download the intermediate certificates as well! This is a fixed 1-2 KB regardless of the end certificate size, that is paid per TLS connection, per intermediate certificate. That now means we’re comparing 3 KB with 37.5 KB or 60 KB, even if we assume a TLS handshake needs 0 bytes for all the cryptography and other protocol fields!
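The arithmetic so far can be reproduced with a short sketch. The 1.5 KB intermediate size is my assumption (the midpoint of the 1-2 KB range above); with it, the numbers line up with the 380 bytes, 3 KB, and 37.5 KB figures. The function names are made up for this example.

```python
def extra_san_bytes(domains: int, avg_bytes_per_domain: int = 20) -> int:
    """Bytes added to a certificate by the names beyond the first one."""
    return (domains - 1) * avg_bytes_per_domain


def chain_kb(connections: int, leaf_kb: float, intermediate_kb: float) -> float:
    """Certificate KB on the wire when every connection gets leaf + intermediate."""
    return connections * (leaf_kb + intermediate_kb)


ASSUMED_INTERMEDIATE_KB = 1.5  # assumption: midpoint of the 1-2 KB range

print(extra_san_bytes(20))                         # 19 extra names * 20 bytes
print(chain_kb(1, 1.5, ASSUMED_INTERMEDIATE_KB))   # one multi-SAN ECDSA handshake
print(chain_kb(15, 1.0, ASSUMED_INTERMEDIATE_KB))  # fifteen single-SAN ECDSA handshakes
```

Larger leaf or intermediate sizes, as with RSA, scale the second number up in the same way.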

And it gets worse…

Although a 1.5 - 2 KB certificate is more than a TCP / QUIC packet’s worth of data, it can usually be included in 2-3 packets on the wire. For the vast majority of connections, 3 packets are sent back to back without waiting for an ACK from the other party, making the transmission take half an RTT!
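A rough way to check this is to count packets. The sketch below assumes a 1460-byte payload per packet (a typical TCP MSS on a 1500-byte MTU path), a first flight of 3 packets as described above, and it ignores TLS framing and the rest of the handshake. All names are hypothetical.

```python
import math


def packets_needed(payload_bytes: int, mss: int = 1460) -> int:
    """Number of packets needed to carry `payload_bytes` (assumed MSS)."""
    return math.ceil(payload_bytes / mss)


def fits_first_flight(payload_bytes: int, window_packets: int = 3, mss: int = 1460) -> bool:
    """True if the payload can be sent without waiting for an ACK."""
    return packets_needed(payload_bytes, mss) <= window_packets


one_domain = 1024                  # roughly 1 KB ECDSA certificate
hundred_domains = 1024 + 99 * 20   # same certificate with 99 extra names

print(packets_needed(one_domain), fits_first_flight(one_domain))
print(packets_needed(hundred_domains), fits_first_flight(hundred_domains))
```

Under these assumptions both certificates fit in the first flight, so the client waits the same half RTT for either.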

This practically means that downloading a certificate with one domain, or one with a hundred domains will effectively be the same for the client. In fact, even if you only use 1-2 other domains within that certificate, you are better off.

These differences could amount to an order of magnitude more bits exchanged between the browser and the server.

Latency

From the observation regarding TCP behavior above, we can deduce that the latency of establishing a TLS connection is practically the same regardless of how many domains the certificate contains. Extra domains will probably make no difference on the network and will likely even arrive in the same number of packets.

But that’s comparing getting one certificate with one domain, versus one with 100. If we are to use Google Maps, that takes 18 connections. This immediately translates now to 18 TLS handshakes versus fewer, or even 1, if all domains are in the same certificate.

Performing 18 handshakes, even if we parallelize them very well, will lead to massively more RTTs. It’s just 18 times as many packets to be sent, 18 times more cryptographic operations, 18 times more TCP handshakes, etc. We’ll also wait for other things too, like parsers. We need to fetch HTML (or HTTP headers) to know that we need all the other resources.

We can therefore conclude that using single-SAN certificates will make our Time To First Byte (TTFB) and other performance timings worse. How much worse? Here it’s more difficult to tell, as it depends on the application, the client, and many other factors, but I wouldn’t be surprised if we saw at least one order of magnitude more time. Since we’ll be paying now at least 5 more RTTs, in the ideal scenario, that can get out of hand.

CPU Performance

Performance here seems straightforward, right? If we make n TLS handshakes, this is n times more expensive than 1, correct?

Although as a rule of thumb this may make sense, in reality it’s slightly different. This heavily depends on the client, and what its connection reuse policy is. It also depends on the algorithms used in the certificates.

The older algorithm, RSA, is cheap to verify, but expensive to sign things with. ECDSA is the opposite: very cheap to perform signatures, but verification is more expensive. We therefore have some disparity between what’s expected of the client, and what’s expected of the server.

If the client makes a new TCP and a new TLS connection per domain name, like in the case of the single-SAN certificate, both the server and the client have to perform n times more computational work.

If the client requests the TLS connection to be resumed for the additional domains, then the algorithm makes more difference. For RSA, this is very effective, as there’s no need to repeat the expensive operations. For ECDSA, however, a new handshake is only marginally more expensive than resuming an existing session, if not the same. Keep in mind this is purely for the cryptographic computation, ignoring all other code running, and of course bytes, milliseconds, etc.

If the same connection is reused, which may happen with multi-SAN certificates, it’s all free, and you never have to worry about anything.

Reality, however, is more complicated, especially when multiple machines come into play, and architectural decisions start to matter more.

If a multi-SAN ECDSA certificate is split into 20 single-SAN certificates, you should expect an order of magnitude more CPU usage from all clients, while if you do that with an RSA certificate, that’s going to come with an order of magnitude more CPU usage for the server’s cryptographic operations.

Memory

Although for most of the TLS clients this is probably not a concern, we can observe significant changes in the server memory usage between the two models.

First of all, there’s the obvious one: if you store n times more certificates, you’ll need n times more RAM. But for most web servers this will rarely get over a few hundred MB.

The real cost is in the TLS sessions. There are just so many things to store about each one: state, cryptographic keys, tickets, etc. This can quickly add up.

Much like with CPU performance, this also depends on the types of certificates, server configuration, and client behavior. Even if we assume the best case scenario however for single-SAN certificates, one could expect an order of magnitude more RAM usage on the server for the part responsible for TLS connection handling (certificate storage and sessions).

Should we go multi-SAN?

Given all of the above, one might notice the orders of magnitude being lost left and right. That means multi-SAN is clearly the best choice, right?

Well, no. Go read that document I linked to again to understand all the pros (missing from this post). But also consider the following:

All of that matters at a large scale only. Most websites don’t have enough requests to have to worry about these things. A few extra GBs of bandwidth a month aren’t going to make a difference, or 10 more MBs of RAM.

It’s that scale that makes you consider other things as well, on top of convenience or agility.

If facebook decided to make all clients spend 10x the CPU cycles to fetch the home page, or open Messenger, this could translate to a noticeable energy usage uptick globally. It could drain users’ batteries faster. If I did it in my blog, nothing would happen.

If Google decided to do 10x more TLS handshakes now, this could affect the global Internet traffic. And if that brought 10x more OCSP requests, or 10x more CRL checks, that could cause even more traffic. None of that would matter if I did it on my paste service.

But there’s another reason why splitting multi-SAN certificates is less harmful for smaller websites too:

All of the domain names that we fetch resources from in the websites above are operated by the entity itself. Google is contacting its analytics service, its ads service, its font service, its thumbnail service, etc. Meta is doing the same as well. In a normal website, you’d get the HTML from that entity, the analytics from Google, the tracking from facebook, the ads from someone else, the comments from another party, and so on.

If you operate all dependencies yourself, it’s easy to group them together. Especially if it ends up being served by the same web server. Because only then can you get the benefits of multiplexing.

Advanced Matchmaking

This brings us to a last, more advanced topic. If you are one of these entities, and you don’t want to, or you can’t minimize the number of domains each app is using, what’s the best strategy for certificate issuance?

First of all, let’s get one thing out of the way: if a certificate includes multiple domains, and they don’t resolve to the same IP addresses, you’re not benefiting much. So if you have 300 servers, each having one website, you don’t get much from pooling them together in the same certificate.
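A rough model of this: a client that reuses connections across hostnames (as HTTP/2 allows) can only do so when the certificate covers the new name and the name resolves to the address it is already connected to. The sketch below counts connections under that assumption, with one IP per hostname for simplicity; the hostnames, certificate labels, and documentation-range IPs are all made up.

```python
def connections_needed(hostnames, cert_of, ip_of):
    """Count connections when reuse needs the same certificate AND the same IP."""
    return len({(cert_of[h], ip_of[h]) for h in hostnames})


hosts = ["a.example", "b.example", "c.example"]

one_cert = {h: "shared-cert" for h in hosts}
own_certs = {h: f"cert-{h}" for h in hosts}

same_ip = {h: "192.0.2.10" for h in hosts}
different_ips = {
    "a.example": "192.0.2.10",
    "b.example": "192.0.2.20",
    "c.example": "192.0.2.30",
}

print(connections_needed(hosts, one_cert, same_ip))        # shared cert, shared server
print(connections_needed(hosts, one_cert, different_ips))  # shared cert, separate servers
print(connections_needed(hosts, own_certs, same_ip))       # separate certs, shared server
```

Only the first combination collapses to a single connection, which is why pooling names from unrelated servers into one certificate buys very little.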

For the larger entities out there this is far more complicated than it sounds. As you can see in my UKNOF 52 talk, in a large content provider network (like mine :P) you don’t always get the same IP address back for a given domain name, especially if you ask from different locations. DNS is used as an effective tool to distribute traffic across the world, dynamically, and probably based on existing and predicted network conditions.

As a cool party trick, you can try running the following command to see which location you’re probably being sent to:

$ dig +short -x $(dig +short google.com)

$ dig +short -x $(dig +short youtube.com)

$ dig +short -x $(dig +short facebook.com)

[...]

Usually the people who manage the DNS server maintain some fine-grained control. They can send login.daknob.net to a different datacenter than blog.daknob.net when there’s too much traffic, or abnormal behavior within the network. When they do that, there’s probably something suboptimal going on that they’re trying to mitigate. It’s not a great time to increase their CPU, RAM, and bandwidth usage by an order of magnitude without them understanding how and why. It can cause cascading failures, red herrings in debugging, and can amplify existing problems.

Also, you need to figure out which domains are typically loaded together. If you don’t want one large SAN certificate with all of your endpoints, and want to split them logically, you’ll need to decide which goes where.

If out of your 100 domains, you can find groups of 10 that are commonly used together, this is great! You can slowly start getting the best of both worlds.
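One possible way to find such groups, sketched under heavy assumptions: take observed page loads (each a set of domains fetched together), count how often each pair co-occurs, and merge pairs seen together at least `min_together` times using union-find. The data, the threshold, and the function name are all hypothetical, and this is not how any particular provider does it.

```python
from collections import Counter
from itertools import combinations


def group_domains(page_loads, min_together=2):
    """Group domains that appear in the same page load often enough."""
    pair_counts = Counter()
    domains = set()
    for load in page_loads:
        domains.update(load)
        for a, b in combinations(sorted(set(load)), 2):
            pair_counts[(a, b)] += 1

    parent = {d: d for d in domains}

    def find(d):
        while parent[d] != d:
            parent[d] = parent[parent[d]]  # path halving
            d = parent[d]
        return d

    # Merge every pair that was loaded together often enough.
    for (a, b), count in pair_counts.items():
        if count >= min_together:
            parent[find(a)] = find(b)

    groups = {}
    for d in domains:
        groups.setdefault(find(d), set()).add(d)
    return sorted((sorted(g) for g in groups.values()), key=lambda g: g[0])


page_loads = [
    {"mail.example", "static.example"},
    {"mail.example", "static.example"},
    {"video.example", "thumbs.example"},
    {"video.example", "thumbs.example"},
    {"mail.example", "video.example"},  # a one-off, below the threshold
]
print(group_domains(page_loads))
```

A caveat of this approach is that a single domain used by everything will chain all groups into one, so the threshold and the input data matter a lot.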

Unfortunately, the real world is here for another wake up call. There will be services used from every web app (e.g. login, analytics, …). Which certificate do you add them to?

The easy answer is “all of them”. It’s perfectly fine to have 10 certificates with the same domain. But now you run into other issues: which one do you serve? The most popular one? Does your server software handle this gracefully? Does the distribution of your clients favor this behavior?
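To illustrate the selection problem, here is a minimal sketch where a shared name sits on several certificates and the server has to pick one. The "largest certificate wins" policy is purely an assumption for the example, not what any particular server software does, and the certificate labels and hostnames are made up.

```python
def candidates(hostname, certs):
    """All certificates (by label) whose SAN list contains `hostname`."""
    return [label for label, san in certs.items() if hostname in san]


def pick_largest(hostname, certs):
    """One possible policy (an assumption): serve the candidate with the most names."""
    options = candidates(hostname, certs)
    if not options:
        return None
    # Ties are broken by label so the choice is deterministic.
    return max(options, key=lambda label: (len(certs[label]), label))


certs = {
    "mail-cert": {"login.example", "mail.example"},
    "video-cert": {"login.example", "video.example", "thumbs.example"},
}

print(candidates("login.example", certs))    # the shared name matches both
print(pick_largest("login.example", certs))
print(pick_largest("mail.example", certs))
```

Whether the largest, the most popular, or the most recently used certificate is the right answer depends on which app the client is most likely to open next, which the server usually cannot know.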

All of that, and much more, is to say that this is a complicated problem. There are so many trade-offs, so many “it depends”, and worst of all you can’t easily discover the ground truth. You’ll likely never know which combination of variables is optimal. What happens in situations like this is that you settle for “acceptable”, knowing that a tiny change could make things so much better (and cheaper to run).

But even that might not be enough… If you ever find yourself in the SAN-splitting and SAN-grouping game, first of all, I’m sorry to hear that, but what you must also know is that all of these are moving targets. Web apps change, client and server behavior shifts, certificates and their requirements evolve, and any static solution will quickly go out of date. And you’ll be pleased to know that dynamic solutions come with more risks!

It is an exciting area where you can make incredible optimizations and have massive gains, but at the same time you need to be careful of overfitting for today at the expense of tomorrow. And since that’s a very important security & compliance dependency, you need to make sure everything keeps working well.

As a final note, and given my recent article on QUIC, I’ll just say that that’s another can of worms that I won’t even begin to cover here. As more and more traffic is shifting to it, it will become even more relevant.