L3 Hardware Offload on MikroTik CRS326

February 5, 2023

With the release of RouterOS 7.1, MikroTik added L3 HW Offload. But what is that?

For a router to deliver packets correctly, it has to look at the destination IP address of every packet that arrives on one of its interfaces, and then determine which port that packet should leave from.

To decide, it uses an internal table, the routing table, which maps each destination to an interface. Some more advanced tables also take the source, or other parameters, into account. A simplified version looks like this, for IPv4:

1.0.0.0/8 -> eth0

2.0.0.0/8 -> eth1

192.168.1.0/24 -> eth4
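As a rough illustration of the lookup, here is a minimal Python sketch that uses the three example routes above. The `lookup` function and the linear scan are assumptions made for readability; real routers use far faster structures (tries in software, TCAM in hardware), but the rule of picking the most specific matching prefix is the same.

```python
import ipaddress

# Simplified routing table from the text: destination prefix -> outgoing interface.
ROUTES = {
    "1.0.0.0/8": "eth0",
    "2.0.0.0/8": "eth1",
    "192.168.1.0/24": "eth4",
}

def lookup(destination, routes=ROUTES):
    """Return the interface of the most specific matching prefix, or None."""
    address = ipaddress.ip_address(destination)
    best_network, best_interface = None, None
    for prefix, interface in routes.items():
        network = ipaddress.ip_network(prefix)
        if address in network:
            # Longest prefix match: prefer the route with the longest mask.
            if best_network is None or network.prefixlen > best_network.prefixlen:
                best_network, best_interface = network, interface
    return best_interface

print(lookup("192.168.1.42"))  # eth4
print(lookup("2.3.4.5"))       # eth1
print(lookup("8.8.8.8"))       # None (no matching route)
```

The router has to run the equivalent of `lookup` once for every packet, which is why the number of packets matters so much in what follows.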

This may seem easy, but some routers have to do this many times per second. Usually a packet is around 1500 bytes, so if you are downloading a file that is 1.5 GB, you’ll need around a million packets to flow in one direction alone.

To sustain a download at 1 Gbps with packets of that size, your router has to be able to push roughly 83,000 packets per second (pps). And since the traffic is bidirectional, it may also have to process a comparable number of packets going the other way. This isn’t something that should be taken for granted, especially for the lower power CPUs that are typically found in smaller routers.
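The arithmetic behind these figures can be checked with a few lines of Python. This is a back-of-the-envelope sketch: the function names are made up for illustration, and it ignores Ethernet framing overhead and assumes every packet is exactly 1500 bytes.

```python
def packets_for_transfer(total_bytes, packet_bytes):
    """Number of packets needed to carry total_bytes (rounded up)."""
    return -(-total_bytes // packet_bytes)

def packets_per_second(bits_per_second, packet_bytes):
    """Packet rate needed to sustain a bit rate with fixed-size packets."""
    return bits_per_second / (packet_bytes * 8)

# A 1.5 GB download in 1500-byte packets.
print(packets_for_transfer(1_500_000_000, 1500))       # 1000000

# A sustained 1 Gbps stream of 1500-byte packets.
print(round(packets_per_second(1_000_000_000, 1500)))  # 83333
```

With these assumptions, 1 Gbps works out to roughly 83,000 packets per second in one direction, so on the order of 100,000.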

When we benchmark network devices, we typically don’t look at the bits per second, but at the packets per second. Here’s why: Imagine you have a bag of groceries on your kitchen floor. You need to pick the items out of it, and sort them in your kitchen. Some will go to the fridge, some to the cupboards, etc. With the current way Linux (and other OSes) work, you’re only allowed to pick one item at a time. When unpacking, whether you pick a chocolate bar, or a large bag of chips, you need the same amount of effort to take it out, look at it, and put it in the right place. However, in the case of the chips, the bag emptied much more (by volume) than it did with the chocolate bar.

We don’t want to benchmark how fast one can empty a bag, but how many items they can take out of it and sort, before coming back for more, in a unit of time. Within reason, if someone can do 10 large items per minute, they can also do 10 small items per minute. Carrying a slightly larger object isn’t the bottleneck here.

You may wonder now why we’re only allowed to pick one item at a time. There are multiple reasons, but we also have software that can process a number of items at the same time, too. VPP for example works with groups of items. You pick 10 chocolate bars at the same time, and sort them together. Other approaches pick groups of the same item (e.g. all bags of chips) to optimize even further.

Today I am testing the CRS326-24G-2S+RM, a switch. It is equipped with a poor 800 MHz 98DX3236 CPU that is built on an ARM 32-bit architecture. Although this is a switch by design, MikroTik allows its use as a router as well, with some performance penalties. This device has 24 Gigabit ports and 2x10 Gigabit ports. When used as a switch, it can forward packets at full speed. But can it really route 24+20 = 44 Gbps? I ran some tests between its two 10G ports, which I connected to a traffic generator, to find out.

Unsurprisingly, when I am sending small (64 byte) UDP packets, the CPU can only do a sustained 98.8 Kpps of traffic.

Why is it so slow?

In the test above, I am using a single IPv4 address to send traffic to one IPv4 address, and I am using the same source port and the same destination port. This is like having a single bag to unpack. Usually routers prefer it (and are faster) when there’s more than one bag to unpack. For example, if they have multiple processors, they can assign one to each bag.

I tried to see what happens if I have one IPv4 address that is sending traffic to another IPv4 address, from all UDP source ports at random, to all UDP destination ports at random. Unexpectedly, the performance dropped to 27.9 Kpps. With large (1500 byte) packets, that would amount to about a third of a Gigabit per second.

On their product page, MikroTik claims “343.5 Kpps”. How did they achieve this? By performing a best-case test: IPv4, Many IPs to Many IPs, Many Ports to Many Ports. To replicate this, I added two static routes: 10.100.0.0/16 in one 10G port, and 10.200.0.0/16 to the other. I then tried to send as many UDP packets as I could, with each packet coming from a random IP and port, and going to a random IP and port. In this test, I managed to get 373.3 Kpps into the switch. This is about the same, and a bit higher(!) than MikroTik’s quoted figure. Interestingly, I was only getting 249 Kpps out, on the other port. I’d consider the lower number as the target to size things for.

During all these tests I had all firewall, NAT, etc. disabled, to achieve the maximum throughput possible. If you pick up a six-pack of orange juice from your bag, and you have to cut the plastic wrap open, and then store each bottle individually, you’re slower. The same is true if you have to look at each item’s expiry date and throw away any bad food.

To summarize, here is the performance of the MikroTik switch as a table:

+--------+--------+----------+----------+---------+---------+
| Src IP | Dst IP | Src Port | Dst Port | pps in  | pps out |
|--------+--------+----------+----------+---------+---------|
|      1 |      1 |        1 |        1 |  98,851 |  98,851 |
|--------+--------+----------+----------+---------+---------|
|      1 |      1 |    65536 |    65536 |  27,974 |  27,974 |
|--------+--------+----------+----------+---------+---------|
|  65536 |  65536 |    65536 |    65536 | 373,313 | 249,458 |
+--------+--------+----------+----------+---------+---------+

This is not great news. Granted, it is a switch that we are (ab)using as a router, but even in a best case scenario, this is barely around 1 Gbps total. And when the switch has to route this amount of traffic, everything freezes: the UI is unresponsive, SSH doesn’t work (well), there are problems everywhere. And with 25 simple firewall rules, MikroTik claims it’s half as fast.

If you are running RouterOS 6, that’s the end of the story. However, almost 10 years after its first release, the next version, RouterOS 7, came out, and with it came the promise of doing (much) better than that.

Have you wondered why as a switch this device can do 65.5 Mpps and as a router it is over 250 times slower? It’s because switching happens on hardware, inside the switch chip, while routing happens on the low performance ARM CPU. But if you take a look at the switch datasheet, it has some very limited L3 (routing) capabilities too! If you stay within some limits, and if MikroTik supports this, the hardware is perfectly capable of doing routing too.

This is what is called Layer 3 Hardware Offload. You offload Layer 3 (routing) to the hardware. This is how all big routers that glue the Internet together manage to handle all this traffic. And it turns out that this ability is making its way into cheaper and cheaper switches too. This one costs only CHF 150!

The full list of limitations is in MikroTik’s documentation, but I’ll try to summarize some of the important ones that apply to this particular switch. Other switches and MikroTik routers have different limitations or figures.

- You can only change the last byte of the MAC address of the port if it does routing

- You cannot do NAT

- You cannot have any firewall rules

- You can have up to 12k IPv4 routes

- You can have up to 3k IPv6 routes

- You can only have a single routing table

- You cannot use protocols like VRRP to achieve redundancy

- You cannot use VPNs / Tunnels that are terminated on the device

- You cannot have VLANs inside other VLANs (Q-in-Q)

It also seems that IPv6 is not a first class citizen, as IPv4 was added in version 7.1, while IPv6 had to wait until version 7.6 :(

Taking all of this into consideration, is it good? Can it improve performance?

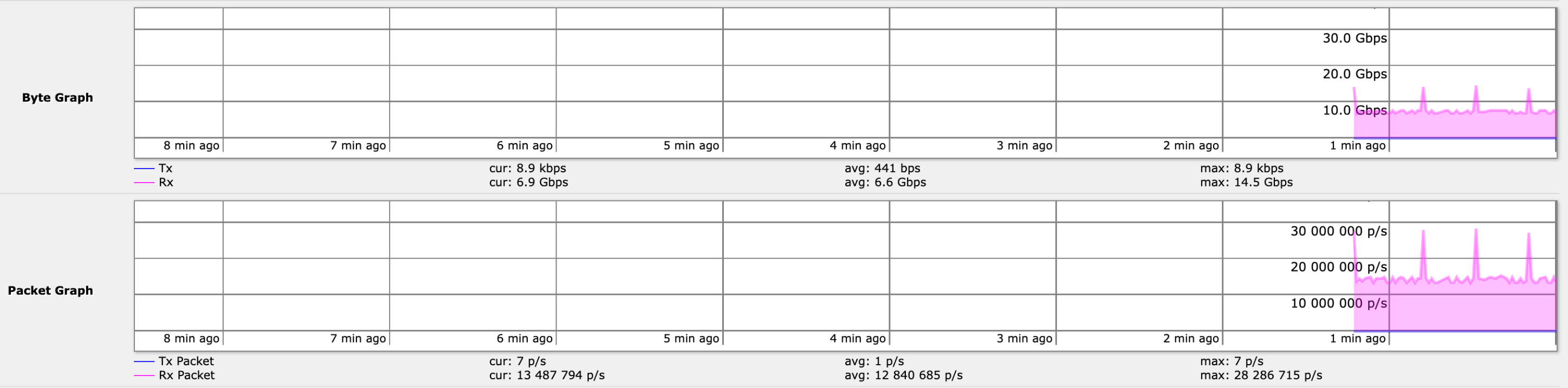

Definitely! I was able to route > 14.8 Mpps across its two 10 Gbps interfaces. This fully saturates the bandwidth of the port and it is the maximum limit 10 GbE can achieve. That’s 10 Gbps of 64 byte UDP packets!
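Where does 14.8 Mpps come from? On the wire, every Ethernet frame is preceded by 8 bytes of preamble and start-of-frame delimiter and followed by a 12 byte inter-frame gap. The sketch below assumes that the "64 byte" packets are minimum-size 64 byte Ethernet frames; the function name is made up for illustration.

```python
PREAMBLE_AND_SFD_BYTES = 8   # sent before every Ethernet frame
INTER_FRAME_GAP_BYTES = 12   # mandatory idle time after every frame

def line_rate_pps(link_bits_per_second, frame_bytes=64):
    """Maximum number of frames per second that fit on an Ethernet link."""
    wire_bytes = frame_bytes + PREAMBLE_AND_SFD_BYTES + INTER_FRAME_GAP_BYTES
    return link_bits_per_second // (wire_bytes * 8)

print(line_rate_pps(10_000_000_000))  # 14880952, one 10G port
print(line_rate_pps(44_000_000_000))  # 65476190, all 24x1G + 2x10G ports
```

The second figure is the same calculation applied to the 44 Gbps of total port capacity, and it lines up with the 65.5 Mpps switching figure mentioned earlier.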

Interestingly, the source and destination IPs & ports didn’t matter. The switch can route at full speed with a single IP address and port talking to a single IP address and port, or with 64k IPs and 64k ports talking to 64k IPs and 64k ports per IP. Moreover, IPv4 and IPv6 had about the same performance!

But is this stable? Yes! I was running this benchmark for about 8 hours and there wasn’t a single packet dropped. The switch chip was running at 69°C idle when I began this test and after a few minutes of 14.8 Mpps L3 routing it went to 74°C where it stayed until the end of the test.

Are there any further limitations? Unfortunately yes. There are some undocumented issues that I discovered. The first one is about metrics.

The bits per second and the packets per second only showed up on the physical interface in the UI and over SNMP. The IP interface (untagged VLAN interface) constantly reported 0 bps and 0 pps during this test, when L3 HW Offloading was enabled. When the routing was done on the CPU, everything was fine.

Another issue I found was that it couldn’t do 10 Gbps at 14.8 Mpps bidirectionally. During all of the tests above, I am sending traffic from one interface, and then receiving it in another. When I tried to send 14.8 Mpps from port A and receive it in port B, while at the same time sending 14.8 Mpps from port B to port A, to use both interfaces as full-duplex, and route 20 Gbps, I ran into a limit. I couldn’t do more than 22 Mpps, when the maximum would have been 30 Mpps. Take this with a grain of salt, as at these rates it’s getting more and more difficult to measure things accurately.

When monitoring the session on the Web UI (WebFig), I was also getting bps and pps spikes above the physical abilities of the interface. As I do not own a Microsoft® Windows™ computer, I don’t know if this also happens on WinBox.

In terms of the Gigabit ports of the switch, in theory it should be able to route across them with similar performance, however I have not tested that. I did not have 20x1G ports on my load generation server and I thought of using another switch as a 3x10G to 24x1G breakout but there were practical limitations :P

MikroTik also allows you to blackhole routes by destination IP address, and I was able to sink 14.8 Mpps in hardware without any CPU usage.

I also didn’t see any problems with 1 static route or 1000 static routes in terms of hardware offload. The performance was identical. The same was observed with ECMP, where it can support up to 8 destinations for the same prefix.
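For readers unfamiliar with ECMP, the idea is that a flow is hashed and the hash selects one of the equal-cost next hops, so that packets of the same flow always take the same path. The Python sketch below only illustrates that idea: how the switch chip actually hashes flows is not something I know, so the CRC32 hash, the `pick_next_hop` function, and the example addresses are all assumptions.

```python
import zlib

MAX_ECMP_NEXT_HOPS = 8  # limit quoted above for this switch

def pick_next_hop(flow, next_hops):
    """Pick one next hop for a flow so the same flow always gets the same one.

    flow is a tuple such as (src_ip, dst_ip, protocol, src_port, dst_port).
    CRC32 is a stand-in hash, not what the hardware uses.
    """
    if not 1 <= len(next_hops) <= MAX_ECMP_NEXT_HOPS:
        raise ValueError("expected between 1 and 8 next hops")
    key = "|".join(str(field) for field in flow).encode()
    return next_hops[zlib.crc32(key) % len(next_hops)]

# Hypothetical next hops and flow, for illustration only.
next_hops = ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"]
flow = ("10.100.0.5", "10.200.0.9", "udp", 12345, 53)
print(pick_next_hop(flow, next_hops))
```

Hashing per flow rather than per packet keeps the packets of one connection in order, at the cost of uneven load when there are only a few flows.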

Finally, the hardware reduces the Hop Limit / TTL by 1, but it cannot generate an ICMP packet to notify the source if this reaches 0 and it is dropped. This leaves you with two options:

- You accept this behavior and this switch becomes invisible to traceroute, and does not inform hosts of dropped packets, or

- You ask the CPU to generate and send ICMP packets

The hardware can drop 14.8 Mpps of TTL 1 packets, but if you enable CPU generation and transmission of ICMP, then bad things™ happen.
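The two options can be written down as a small decision function. This is a simplified Python model of the behavior described above, not MikroTik's implementation; the function name, the `icmp_via_cpu` flag, and the action strings are made up for illustration.

```python
def handle_ttl(ttl, icmp_via_cpu=False):
    """Return (action, new_ttl) for a packet arriving with the given TTL / Hop Limit."""
    if ttl <= 1:
        # Decrementing would reach 0, so the packet is dropped either way.
        if icmp_via_cpu:
            # Option 2: hand the event to the CPU so it can send an ICMP error.
            return ("punt_to_cpu_for_icmp", 0)
        # Option 1: drop in hardware, invisible to traceroute.
        return ("drop_silently", 0)
    return ("forward", ttl - 1)

print(handle_ttl(64))                    # ('forward', 63)
print(handle_ttl(1))                     # ('drop_silently', 0)
print(handle_ttl(1, icmp_via_cpu=True))  # ('punt_to_cpu_for_icmp', 0)
```

The problem with the second option is that every expiring packet lands on the slow CPU path, which is exactly what hardware offload was meant to avoid.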

All of the tests above were performed on a CRS326-24G-2S+RM Revision 2 running Software & Firmware RouterOS 7.4 and RouterOS 7.7 (for IPv6). The two 10G interfaces were connected with SFP+ SMF optics (10GBASE-LR) to a server with an Intel X710-DA4.

I am definitely looking forward to a future where more and more MikroTik products can utilize their hardware to the fullest extent possible and can offload critical processes there. RouterOS 7 apparently can receive kernel updates, and isn’t stuck on a 2012 kernel, so newer drivers and SDKs can be used. It looks like MikroTik went with Marvell as the vendor of choice, and I really hope it works out for them, as there are many great features waiting to be unlocked, such as VXLAN!

For CHF 150, L3 routing at line rate is amazing, despite the small TCAM space (only a few thousand routes can fit on the hardware, and the rest get processed by the CPU). Now we’ll have to wait until MikroTik finishes their routing protocols, in a year or two, and gets them feature-complete and stable, and this will make for a very nice device for installations like wireless towers, etc. I can’t wait!